Do We Still Need Scientific Journals?

360 years after the first science journal was launched, researchers examine the value of the peer-review process today.

by Foundational Questions Institute

March 9, 2025

"The peer-review system is basically broken," says Ivan Oransky, co-founder of Retraction Watch, a database that collates cases in which misconduct has forced academic papers to be pulled from journals. "It's been corrupted by bad actors and by an incentive structure for 'publish or perish' that rewards quantity over quality," he says.

Oransky echoes the concerns raised by many scientists in recent years, who have argued that the bloated scientific-publishing industry is churning out an ever-growing volume of low-quality papers. Megapublishers are estimated to rake in around US$20 billion a year, yet the academic reviewers who judge whether submitted papers are worthy of publication are often unpaid volunteers, and the papers' authors are themselves often charged substantial fees to publish. Critics argue that, at best, the peer-review process is not serving the research community efficiently and, at worst, it exploits academics and aids public misinformation.

FQxI recently surveyed 73 of its scientist members for their opinions on peer review. The survey found that 63% feel that they are judged more by publication metrics (measures of how much they publish, how many citations their papers garner, and the prestige of the journals in which they publish) than by the actual quality of their work and the content of their research.

Reporters Brendan Foster, Miriam Frankel, Zeeya Merali and Colin Stuart spoke with a range of researchers and journal editors about some of the issues raised by the survey. Given an alleged fall in the standards of costly journals and the rising popularity of free, open-access online preprint repositories, is there still value to be found in the peer-review process?

The current system for science publishing dates back more than three and a half centuries. The world's first and longest-running science journal, the UK Royal Society's Philosophical Transactions, celebrates its 360th anniversary this month. Launched by Henry Oldenburg in March 1665, it appeared monthly and cost a shilling, and it defined many of the attributes associated with journals today. "Science journals establish priority of discovery, register claims and preserve them long term, and also act as a system for the organized scrutiny of those claims by the community, both before publication and after," says Tony Ross-Hellauer, leader of the Open and Reproducible Research Group at the Graz University of Technology in Styria, Austria. "It's quite a remarkable system."

Today, academic researchers are still expected to write up their findings in an accessible manner, so that others can reproduce and build on their work. These papers can be posted to free online preprint servers without substantial vetting. However, papers receive the stamp of authority that many university hiring and promotion committees crave only after being officially published in a journal. Publication involves the paper being reviewed by two or three experts in the field, who are usually anonymous (and who may or may not know the identity of the paper's authors, depending on the journal). The reviewers then send reports back to the journal's editor, sometimes calling for revisions prior to publication, or recommending rejection. The more prestigious the journal, and the more that other papers cite the work, the bigger the reputation bump for the paper's authors.


This peer-review system worked well for an impressively long period of history, notes Abhay Ashtekar, a physicist at Pennsylvania State University in State College, even if the pace seems leisurely by today's standards. Ashtekar has sat on the editorial boards of numerous journals, most recently that of Physical Review Letters (PRL), published by the American Physical Society (APS). He published his first paper more than half a century ago, when the peer-review process was carried out by mail, with preprints posted to the 15 or so colleagues who might be interested in the topic. "You would get maybe one review request a month, with reviewers given ample time to review," Ashtekar recalls. "It was slow, but it also gave us breathing space, and referees understood the papers, and their reports had valid criticisms."

Peer review became the gold standard for certifying the credibility of research. "There was scientific integrity and quality," says Ashtekar.

Things sped up dramatically in the 1990s, however, with the arrival of the Internet. Physics preprints that were once sent out by mail now whizzed across the globe by email in huge numbers, and it became increasingly evident that a central repository was needed. In 1991, physicist Paul Ginsparg set up an online repository for physics papers at the Los Alamos National Laboratory in New Mexico. This repository would eventually be housed at arXiv.org. "When the arXiv came along, it exploded the way that people publish, blew it all up," says Raissa D'Souza, a computer scientist and engineer at the University of California, Davis, who founded the APS journal Physical Review Research and is a member of the board of reviewing editors for the journal Science, published by the American Association for the Advancement of Science. "A lot of incredibly high-quality papers get published on the arXiv," she says. ArXiv took some of the control away from publishing houses, D'Souza says, and "gave the power back to the individual and the community."

Scientific print journals took a while to catch on to the opportunities offered by the Internet age, recalls Jorge Pullin, a physicist at Louisiana State University in Baton Rouge. "Tradition dies slow in academia," he says. Pullin was on the board of the Institute of Physics' New Journal of Physics, the first open-access, online journal in physics, when it launched in 1998. "We got all sorts of crazy questions, like, 'what would happen if there is no more electricity?'" Pullin recalls. "And I would say, 'if there's no more electricity, I don't think this journal is going to be people's top concern.'"

These days, arXiv receives around 20,000 submissions a month and hosts over 2.6 million papers. It offers free and fast access to papers, with only light-touch vetting by volunteer moderators to screen out the most obviously wild submissions. Such is the popularity of arXiv and other preprint servers that 59% of FQxI's survey respondents reported learning of important results first from preprints, compared with just 15% who said that they first learn of advances from journals. "In mathematical physics, very often the time between sending your article to the journal and getting it published might be up to two years," says Kasia Rejzner, a mathematician at the University of York, UK, and president of the International Association of Mathematical Physics. "People just refer to the arXiv version for years before it actually gets published," she adds.

Oransky also works with the Simons Foundation, which now provides financial support to help modernize arXiv. He says that arXiv has another, perhaps counterintuitive, advantage over traditional journals: with arXiv, there is no pretence that the papers have reached some mythical standard of correctness. "They are quite directly labeled as not peer reviewed," Oransky says. "But it leads to conversations about those papers."

By contrast, the 'peer review' label can lead people to think that a journal paper has been rigorously vetted, without realizing that there are limits to what reviewers are actually expected to check. For instance, reviewers do not typically get their hands dirty reproducing experiments, scrutinizing lines of computer code, or going through detailed mathematical calculations. Rather, their role is primarily to provide 'sanity checks' that the submitted work appears reasonable and is not majorly flawed. Researchers understand this, but the public possibly less so, notes Ross-Hellauer. In FQxI's survey, 82% of respondents (all of whom are, or have been, professional scientists) said that in their opinion the credibility of peer-reviewed papers is limited (with many noting that mistakes are to be expected and do not necessarily detract from the value of peer review overall), while only 1% of respondents believed that the public is also aware of these limitations. "You'll see people saying on Reddit threads that 'this is a peer-reviewed publication,' as if peer review is this golden halo," Ross-Hellauer says. "That's always striking to see once you have investigated the peer-review process."


There have also been some embarrassing high-profile cases of journals rejecting papers that were eventually revealed to be outstanding. For instance, Katalin Kariko and colleagues' Nobel-prize-winning paper on mRNA vaccines was originally rejected by the prestigious journals Nature (published by Springer Nature) and Science. "In computer science, a lot of very influential, very novel studies were rejected multiple times by journals, maybe for being too novel for the time," says Fengyuan Liu, a doctoral student in computer science at New York University in Abu Dhabi. "Some didn't even get published at all and just remained as preprints, yet received tens of thousands of citations," he adds.

This raises the question of whether there is any value left in submitting studies to peer-reviewed journals at all. Lidia del Rio, a physicist at the University of Zurich, Switzerland, who co-founded the non-profit journal Quantum in 2016, argues that there are good reasons to persevere. "The main role of peer-reviewed journals is curating," she says, meaning separating the wheat from the chaff among the vast number of preprints. She adds that since funding bodies use journal publications as a measure of quality, journals are "a necessary evil at the moment."

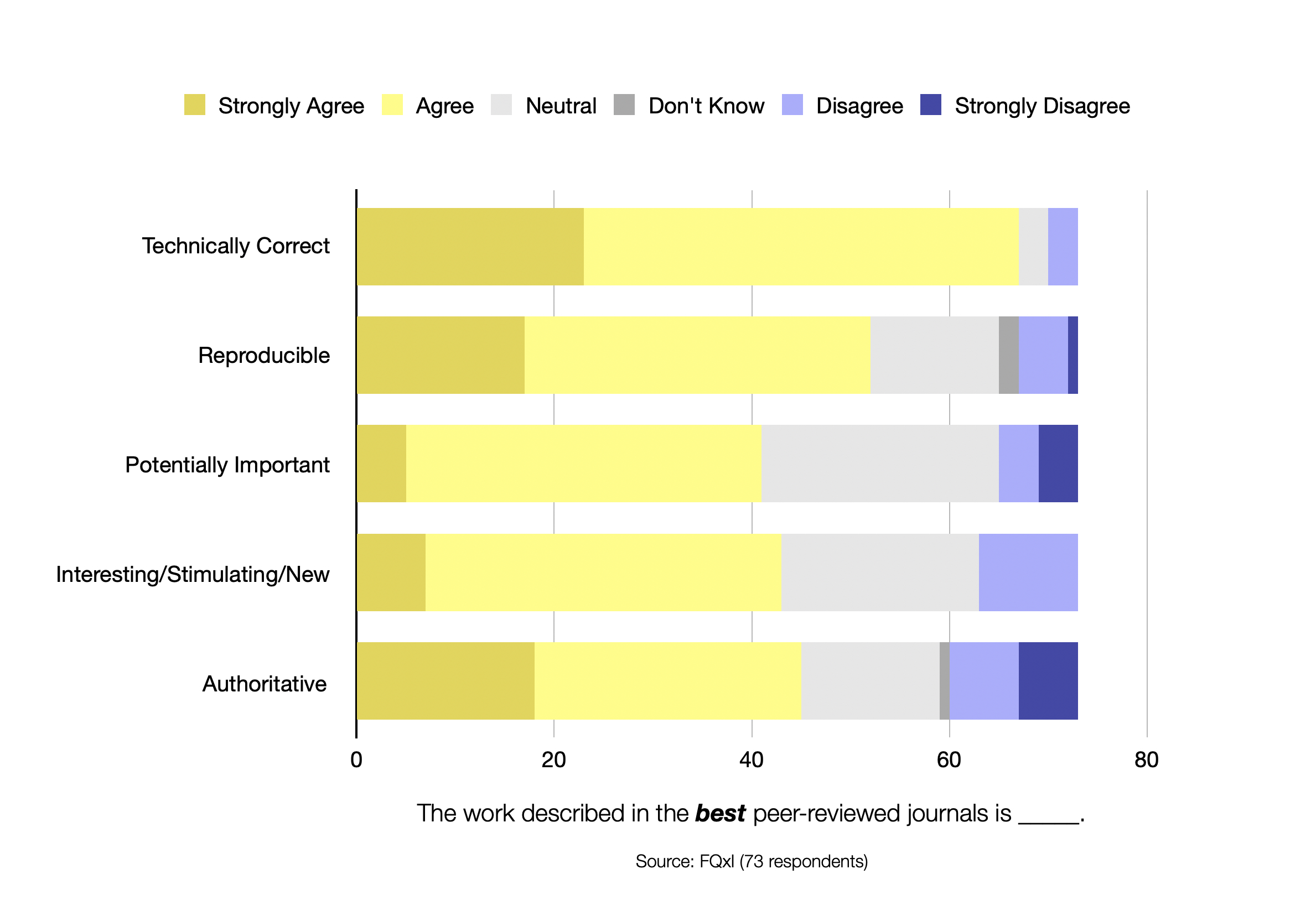

The FQxI members surveyed largely agreed that, when done well, peer review does still provide an important curation service for the community: 92% of respondents expressed confidence that the work described in the best journals in their field is technically correct; 71% were confident that the work described in the best journals is reproducible; and 62% said that peer-reviewed papers published in the best journals carry a legitimate stamp of authority. The journal most often named by respondents as the 'best' on the market was PRL, which was lauded for its impressive history of publishing milestone papers for more than 60 years (over 65% of the Nobel-prize-winning research published in the past 40 years is included in Physical Review journals), its high-quality papers, and its transparent and fair peer-review process relative to other journals. More generally, the family of APS journals was praised by respondents, as was the relative newcomer Quantum, which was appreciated for its "ethos" as an open-access, non-profit journal run by academics with subject-area experts as editors, and for its review policy encouraging verification of facts and discouraging authors from overstating their results. (It is worth noting that most survey respondents, 73%, were physicists.)

FQxI survey respondents were asked to indicate their confidence level that the work described in papers published in the best peer-review journals in their discipline is technically correct, reproducible, potentially important, interesting, stimulating and new, and carries a legitimate stamp of authority.

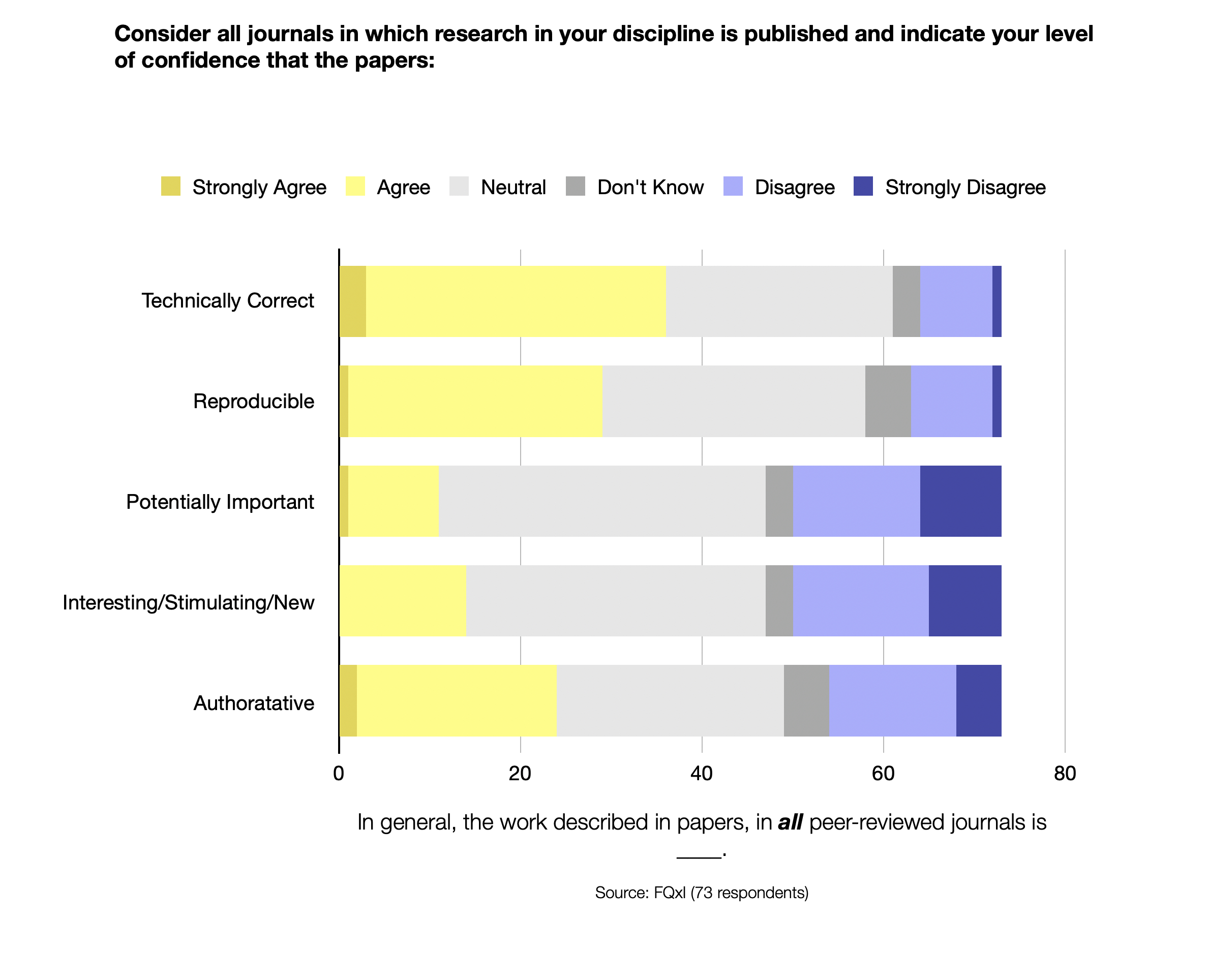

But the confidence levels of respondents dropped significantly when they were asked the same questions about papers published in all journals in their field, rather than in just the best journals. In this case, only 49% expressed confidence that the work described in the papers is technically correct, 40% that it is reproducible, and 33% that passing peer review gave papers a legitimate stamp of authority.

FQxI survey respondents were asked to indicate their confidence level that, in general, the work described in papers published in all peer-review journals in their discipline is technically correct, reproducible, potentially important, interesting, stimulating and new, and carries a legitimate stamp of authority.

Ashtekar is unsurprised by this lack of faith in journals in general. "The quality of published papers has definitely gone down over the past 50 years," he says.

So what's going wrong?

The main issue is the massive explosion in the number of journals in recent years. There are now ten times as many journals as there were just ten years ago, says David V. Smith, a neuroscientist at Temple University in Philadelphia, Pennsylvania, who co-authored a paper about ways to improve peer review, having crowd-sourced the opinions of academics on X (then Twitter). An alarming proportion of these journals can be classed as 'predatory,' charging authors exorbitant fees to boost their own profits while providing little quality control in return.


This means that qualified reviewers now face far too many requests. "If you look at the number of hours a peer review takes and multiply that by the number of peer reviews per paper, times the number of papers, and then try and map that against the number of qualified experts in that particular field, the math just doesn't work," says Oransky.

The average number of review requests from journals reported by FQxI's survey respondents was 21 per year, but numbers varied wildly; respondents at the high end reported receiving around 150 requests to review papers in the past 12 months. This disproportionate demand on a subset of survey respondents fits with general trends in academia, says Smith. "80% of the peer-review workload is done by only 20% of eligible peer reviewers," he notes.

This imbalance is understandable, if unfair, adds Rejzner. "The further away from mainstream a subject is, the less people there are that are knowledgeable in that subject, so then those experts are oversubscribed with review requests," she says.

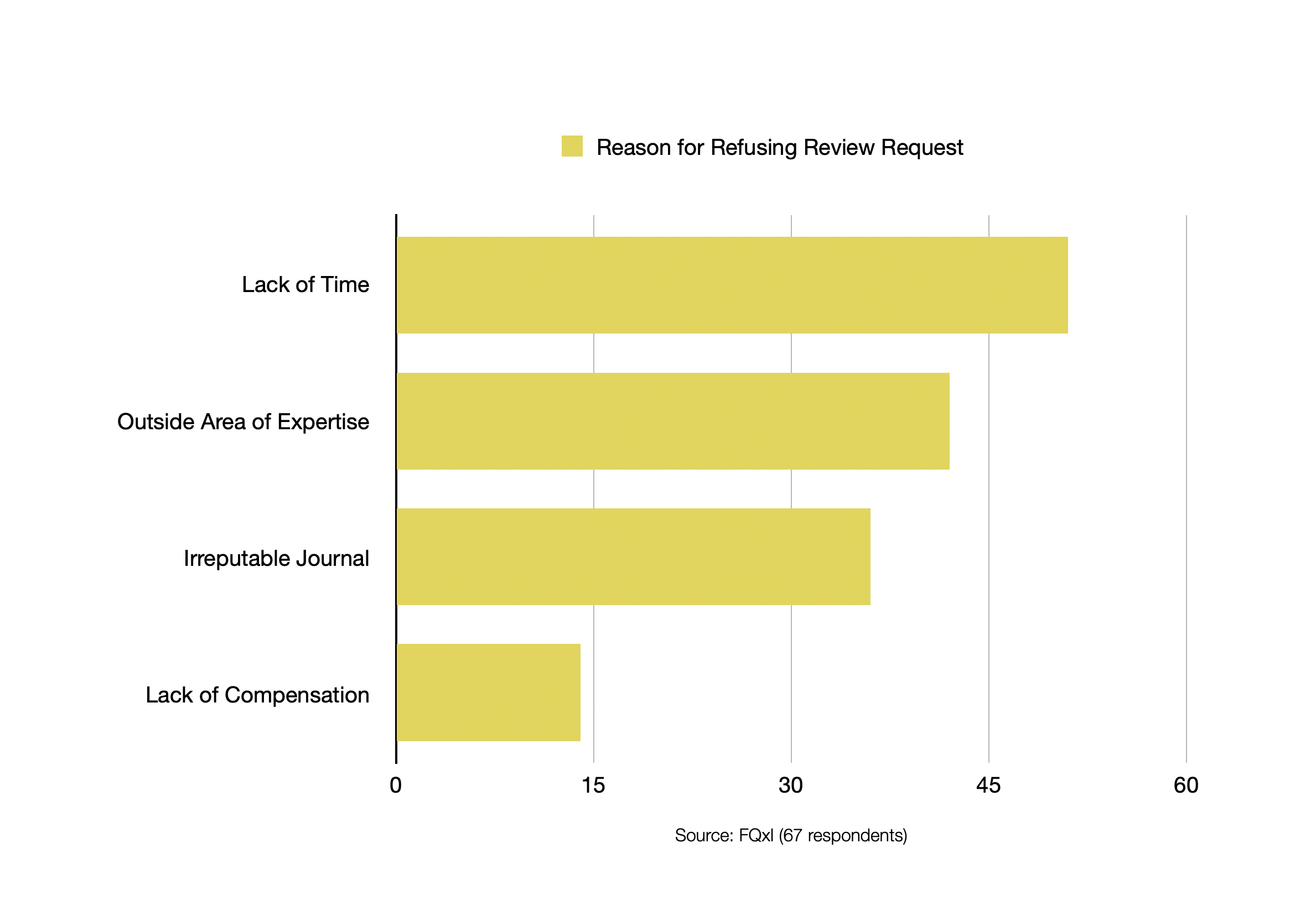

At the same time, the researchers whom journal editors call on to carry out reviews are under pressure to publish more and more of their own work to please funding bodies and universities, so they tend to decline review requests for lack of time. In FQxI's survey, 70% of respondents reported refusing a review request in the past year that they would otherwise have accepted, because of time constraints. "What happens is that 70-75% of first-choice referees turn down requests," says Ashtekar. That forces editors to approach their second-, third- or fourth-choice referees, who may not be experts on the topic. Indeed, 58% of FQxI survey respondents said that they had declined review requests in the past year because they lacked expertise in the topic of the paper (and many commented that they wished others would do the same, to avoid reports being written by unqualified referees).

FQxI survey respondents who had declined review requests in the past 12 months were asked to indicate their reasons for refusal.

Pullin notes that some academics, when pressed for time, pass papers on to their graduate students to ghost-referee, possibly diminishing the quality of the review.

"The peer review system is collapsing because of a glut of papers," Pullin says. "It's ridiculous and it cannot go on. There has to be some sort of reckoning."

Part 2 of this series, "The Perils of Peer Review," discusses academics' dissatisfaction with megapublishers, and how an over-emphasis on publication metrics has encouraged some academics to engage in dubious practices, from misattributing credit to plagiarism and fraud. Part 3, "How to Fix Peer Review," describes suggestions for improving science publishing, including compensating reviewers and moving towards post-publication peer review.

FQxI publishes a book series in partnership with Springer Nature. Miriam Frankel and Zeeya Merali have reported for Nature, and Merali has also reported for Science.

Image credit: Royal Society